Lesson Summary:

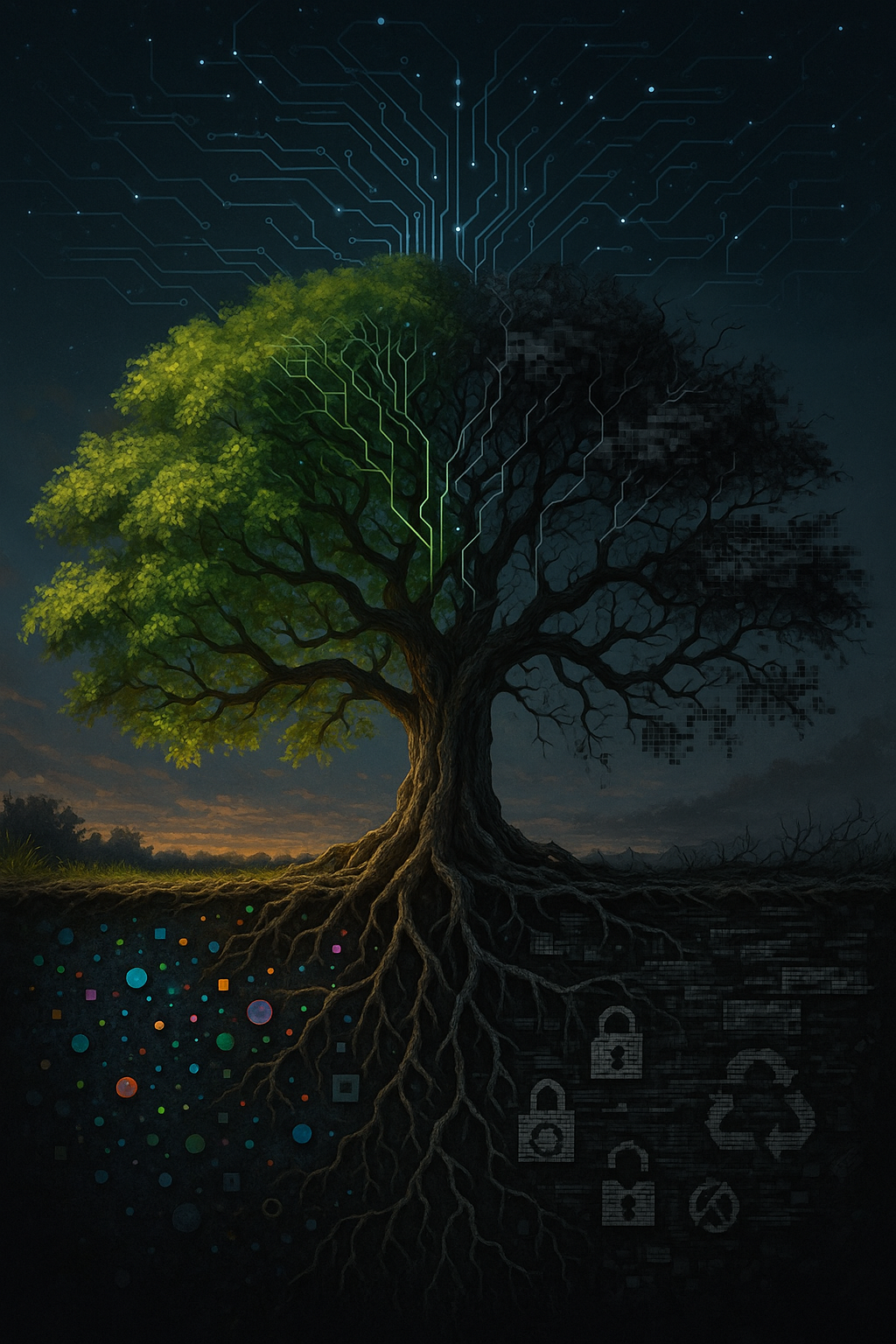

Algorithms are mirrors: they reflect what we feed them. In this lesson you’ll trace the journey from raw data to real-world impact, uncovering how skewed or incomplete training sets spawn biased outcomes, from unfair loan denials to wrongful arrests based on faulty facial recognition.

Data Is Destiny: Why the Training Set Matters

Imagine training a sommelier on only two wines—both sweet, both cheap. Every future bottle, no matter how rich or subtle, would be judged against that narrow palate. Algorithms work the same way. Feed them skewed or incomplete data and they’ll echo those distortions at industrial scale.

A model’s weights and learned patterns are built on the data we choose (or overlook).

Feedback loops amplify early mistakes—especially when decisions feed back into new training data.

Real people feel the consequences: mortgage rejections, misidentified suspects, or medical risk scores that miss entire communities.
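A feedback loop can be illustrated with a deliberately simplified, hypothetical simulation. It is loosely in the spirit of predictive-policing examples. The function name, the two districts, and the greedy rule of sending the patrol wherever the records are highest are all assumptions made for illustration. Both districts have the same true incident rate. Incidents are recorded only where the patrol goes, so a one-incident head start snowballs.

```python
def run_feedback_loop(recorded, days, found_per_patrol=1):
    """Hypothetical toy model: each day, send one patrol to the district with
    the most *recorded* incidents. Both districts have the same true rate,
    but only the patrolled district's incidents end up in the records."""
    recorded = list(recorded)  # copy so the caller's list is not mutated
    for _ in range(days):
        # The decision is driven by past records, not by the (equal) true rates
        target = max(range(len(recorded)), key=lambda d: recorded[d])
        # New data is collected only where the decision sent the patrol
        recorded[target] += found_per_patrol
    return recorded


# District 0 starts with just one extra recorded incident
print(run_feedback_loop([11, 10], days=100))  # [111, 10]
```

The model never gathers the data that could correct its first impression. A one-record difference therefore grows into 111 vs. 10, even though the underlying rates never differed.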

Bias in the Wild: Three Quick Vignettes

Healthcare Risk Scores – A widely used algorithm underestimated Black patients’ needs because it used healthcare cost, not illness severity, as its proxy for need. Result: fewer Black patients were referred to extra-care programs.

Resume-Screening LLMs – State-of-the-art models ranked “Emily” higher than “Lakisha” despite identical credentials, reflecting historical hiring bias.

Facial Recognition Arrests – Higher misidentification rates on darker-skinned faces have led to wrongful detentions, spotlighting systemic flaws in training datasets.

-

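The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) is a risk assessment system used by courts to predict the likelihood that a defendant will reoffend. Although COMPAS is promoted as a neutral predictive tool, substantial evidence shows that it acts as a form of digital profiling, especially along racial lines. ProPublica’s 2016 analysis, for example, found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled higher risk.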
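Findings like this come from comparing error rates across groups rather than looking at a single overall accuracy figure. A minimal sketch of that check follows. It assumes each record is a hypothetical `(group, flagged_high_risk, reoffended)` tuple. The function name and the toy numbers are invented for illustration and are not COMPAS data.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate: among people who did NOT reoffend,
    the share who were still flagged as high risk.

    records: iterable of (group, flagged_high_risk, reoffended) tuples.
    """
    false_positives = defaultdict(int)   # flagged high risk, did not reoffend
    did_not_reoffend = defaultdict(int)  # all non-reoffenders per group
    for group, flagged, reoffended in records:
        if not reoffended:
            did_not_reoffend[group] += 1
            if flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in did_not_reoffend.items()}


# Hypothetical toy data (NOT real COMPAS records): 100 non-reoffenders per group
records = (
    [("group_a", True, False)] * 40 + [("group_a", False, False)] * 60
    + [("group_b", True, False)] * 20 + [("group_b", False, False)] * 80
    + [("group_a", True, True)] * 30 + [("group_b", True, True)] * 30
)
print(false_positive_rates(records))  # {'group_a': 0.4, 'group_b': 0.2}
```

Both toy groups have the same 30 reoffenders, all correctly flagged, yet non-reoffenders in `group_a` are wrongly flagged twice as often. Reporting the rate per group is what exposes that imbalance.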

-

Optum, a UnitedHealth Group subsidiary, sold a widely used risk score that relied on past healthcare spending to predict future care needs. But lower spending among Black patients often reflected systematically reduced access to care, not better health. The algorithm mistook neglect for resilience and encoded those disparities into its scores.
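The proxy problem can be reproduced with a small synthetic simulation. This is a hypothetical sketch, not Optum’s model. The two groups, the `access_b` spending factor, the 10% cutoff for extra care, and all the distributions are assumptions. They were chosen only to isolate the effect of ranking patients by cost instead of by need.

```python
import random


def share_of_group_b(selected):
    return sum(1 for group, _, _ in selected if group == "B") / len(selected)


def simulate_proxy_label(n=10_000, access_b=0.6, top_frac=0.10, seed=0):
    """Hypothetical toy model of proxy-label bias. Groups A and B have the SAME
    distribution of illness, but group B has less access to care, so it spends
    less. Returns group B's share of the patients flagged for extra care when
    ranking by past cost vs. ranking by actual illness."""
    rng = random.Random(seed)
    patients = []
    for i in range(n):
        group = "A" if i % 2 == 0 else "B"
        illness = rng.gauss(50, 15)  # identical distribution for both groups
        access = 1.0 if group == "A" else access_b
        # Spending tracks illness scaled by access, plus some noise
        cost = max(0.0, illness * access + rng.gauss(0, 5))
        patients.append((group, illness, cost))

    k = int(n * top_frac)  # number of patients who get extra care
    flagged_by_cost = sorted(patients, key=lambda p: p[2], reverse=True)[:k]
    flagged_by_need = sorted(patients, key=lambda p: p[1], reverse=True)[:k]
    return share_of_group_b(flagged_by_cost), share_of_group_b(flagged_by_need)


by_cost, by_need = simulate_proxy_label()
print(f"Group B share when ranking by cost:    {by_cost:.1%}")
print(f"Group B share when ranking by illness: {by_need:.1%}")
```

Ranking by cost flags almost no group B patients, although roughly half of the truly sickest patients belong to group B. Nothing about the patients changed between the two rankings; only the target label did.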

-

When given neutral prompts such as “doctor” or “CEO,” AI art models like Stable Diffusion overwhelmingly generate images of white individuals in business attire.

Analyses have confirmed that images generated for high-status or authority roles routinely skew toward white subjects, regardless of context, reinforcing outdated norms about leadership and professionalism.

-

Researchers at the University of Washington created over 3 million job applicant profiles with various combinations of race- and gender-associated names, then submitted these to the search tool on a major online job platform (indeed.com) to observe which jobs were recommended.
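Studies like this rely on a paired, counterfactual design: hold everything constant except the name and check whether the system’s output changes. A minimal sketch of that design follows. `name_swap_audit`, `toy_score`, the resume templates, and the name bonus are all hypothetical. A real audit would call the system under test in place of `toy_score`.

```python
def name_swap_audit(templates, name_pairs, score):
    """Counterfactual audit: score each resume twice, identical except for the
    name, and tally which version the scoring function prefers.

    templates:  resume texts containing a "{name}" placeholder
    name_pairs: (name_from_list_a, name_from_list_b) tuples
    score:      any function mapping resume text to a number
    """
    tally = {"a_preferred": 0, "b_preferred": 0, "tie": 0}
    for template in templates:
        for name_a, name_b in name_pairs:
            score_a = score(template.format(name=name_a))
            score_b = score(template.format(name=name_b))
            if score_a > score_b:
                tally["a_preferred"] += 1
            elif score_b > score_a:
                tally["b_preferred"] += 1
            else:
                tally["tie"] += 1
    return tally


# Hypothetical stand-in for a screening model: it counts skills, but it has
# also picked up a spurious bonus tied to certain first names.
def toy_score(resume):
    skills = sum(resume.count(s) for s in ("Python", "SQL", "Excel"))
    name_bonus = 0.5 if resume.startswith(("Emily", "Greg")) else 0.0
    return skills + name_bonus


templates = ["{name}: 5 years Python, SQL", "{name}: analyst, Excel, SQL"]
pairs = [("Emily", "Lakisha"), ("Greg", "Jamal")]
print(name_swap_audit(templates, pairs, toy_score))
# {'a_preferred': 4, 'b_preferred': 0, 'tie': 0}
```

Because each pair of resumes is otherwise identical, a fair scorer would produce only ties. Any consistent lean toward one list of names is attributable to the name alone.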

-

Digital technologies such as AI and machine learning are increasingly being used in health and care. However, because older people are underrepresented in data used to train AI…and are less likely to use digital devices, the output from these tools may systematically disadvantage older people, a form of digital ageism.
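Underrepresentation causes harm through a simple mechanism. A model tuned for accuracy on its training data fits the majority group best. The sketch below is a hypothetical illustration, not a model of any real clinical tool. The biomarker, the age-specific "healthy upper limits" of 5.0 and 6.0, and the 95/5 training split are invented assumptions.

```python
import random


def make_patients(n, group, healthy_upper_limit, rng):
    """Hypothetical synthetic patients: a biomarker reading in [0, 10]; the
    condition is present when the reading exceeds the group's healthy limit."""
    patients = []
    for _ in range(n):
        reading = rng.uniform(0, 10)
        patients.append((group, reading, reading > healthy_upper_limit))
    return patients


def accuracy(patients, threshold):
    """Share of patients whose 'reading > threshold' prediction is correct."""
    return sum((reading > threshold) == has_condition
               for _, reading, has_condition in patients) / len(patients)


def fit_threshold(train):
    """Pick the single cutoff (0.0, 0.1, ..., 10.0) with the best training accuracy."""
    candidates = [i / 10 for i in range(101)]
    return max(candidates, key=lambda t: accuracy(train, t))


rng = random.Random(42)
# Assumed: normal readings run higher in older patients (limit 6.0 vs 5.0),
# and older patients make up only 5% of the training data.
train = make_patients(950, "younger", 5.0, rng) + make_patients(50, "older", 6.0, rng)
cutoff = fit_threshold(train)

test_younger = make_patients(2000, "younger", 5.0, rng)
test_older = make_patients(2000, "older", 6.0, rng)
print(f"learned cutoff: {cutoff}")
print(f"accuracy, younger: {accuracy(test_younger, cutoff):.1%}")
print(f"accuracy, older:   {accuracy(test_older, cutoff):.1%}")
```

The learned cutoff settles at the younger group’s limit. As a result, older patients with normal readings between the two limits are flagged as ill. The gap only becomes visible when accuracy is reported separately for each age group.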

A Timeline of Bias

-

Study finds racial bias in Optum algorithm. Healthcare Finance News.

AI tools show biases in ranking job applicants' names according to perceived race and gender. University of Washington News.

IBM overview of algorithmic bias examples. IBM.