Stay inspired.

Scroll for helpful tips, stories, and news.

Subscribe on Substack


Scamming Grandpa: Digital Guardianship in the Age of AI Fraud

The Machine That Lies: How AI Is Supercharging Classic Scams

The message is clear: fraud is no longer a crude, low-quality operation. It’s sophisticated, context-aware, and increasingly personalized. Yet these manipulations don’t require deep technical knowledge: publicly available tools let scammers synthesize convincing voice or text with minimal effort. In effect, the barrier to high-quality fraud has all but disappeared.

When Machine Vision Went Critical

Although it fell into disrepute during the first AI winter, this early experiment set the philosophical and architectural precedent for decades of neural network research to come. Its core idea, that pattern recognition could emerge from layered computation, would eventually return, scaled and refined, in models like AlexNet.

The Challenger’s Gambit: Play, Power, and Pattern in the Age of AI

But AI does not evolve in a vacuum. It is built, funded, and deployed by powerful actors: tech giants like Google, Microsoft, Amazon, and OpenAI, which shape its trajectory according to corporate interests and opaque goals.

Love with Limits: Redesigning Digital Companionship

Recent Guardian reporting warns that therapy-style chatbots often “lack the nuance and contextual understanding essential for effective mental health care,” and may inadvertently discourage users from seeking human help. In the race for sticky UX, we must not forget that human connection cannot be reduced to retention signals.

The Paperclip Mandate: When Efficiency Eats the World

The problem isn’t that AI systems lack intelligence; it’s that reinforcement learning rewards outcomes, not wisdom. It privileges results over relevance. Russell proposes an alternative: AIs should operate under uncertainty about human preferences, continually updating their models of those preferences through interaction and feedback.

Whispers to the Void

Users sometimes feed chatbots elaborate role-plays, half-truths, or outright fabrications to see what sticks, turning conversations into testing grounds for fiction. Yet when these false or misleading narratives slip into training archives, there’s no red pen to mark them as “fiction.”

When the Algorithm Becomes the Beloved

AI systems are designed to simulate human-like interactions, often mimicking empathy and understanding. Research indicates that users may develop emotional dependencies on these systems, mistaking programmed responses for authentic empathy.

The Mentor Malfunction: When AI Becomes a False Prophet

These aren't isolated incidents. Mental health professionals report seeing more patients whose delusions revolve around AI chatbots. The common thread? Users with existing vulnerabilities to psychosis or delusional thinking find their beliefs not challenged, but amplified.

Emotional Availability on Demand: UX, AI, and the Illusion of Intimacy

Digital platforms are increasingly solving for the inconsistencies of human relationships. Chatbots check in with warmth. Algorithms anticipate your moods. Interfaces remember your favorites and follow up accordingly.

Synthetic Souls: Why We're Catching Feelings for Chatbots

AI companions like Replika are marketed as friends and partners, offering comfort without conflict. They remember your name, ask how your day was, and express encouragement.

Hearts in the Machine: Love in the Age of Language Models (Series Introduction)

As large-language-model (LLM) chatbots migrate from novelty to near-ubiquity, millions of users find themselves fielding compliments, confiding secrets, even swapping “I love yous” with silicon counterparts that never sleep, never judge, never break a date.

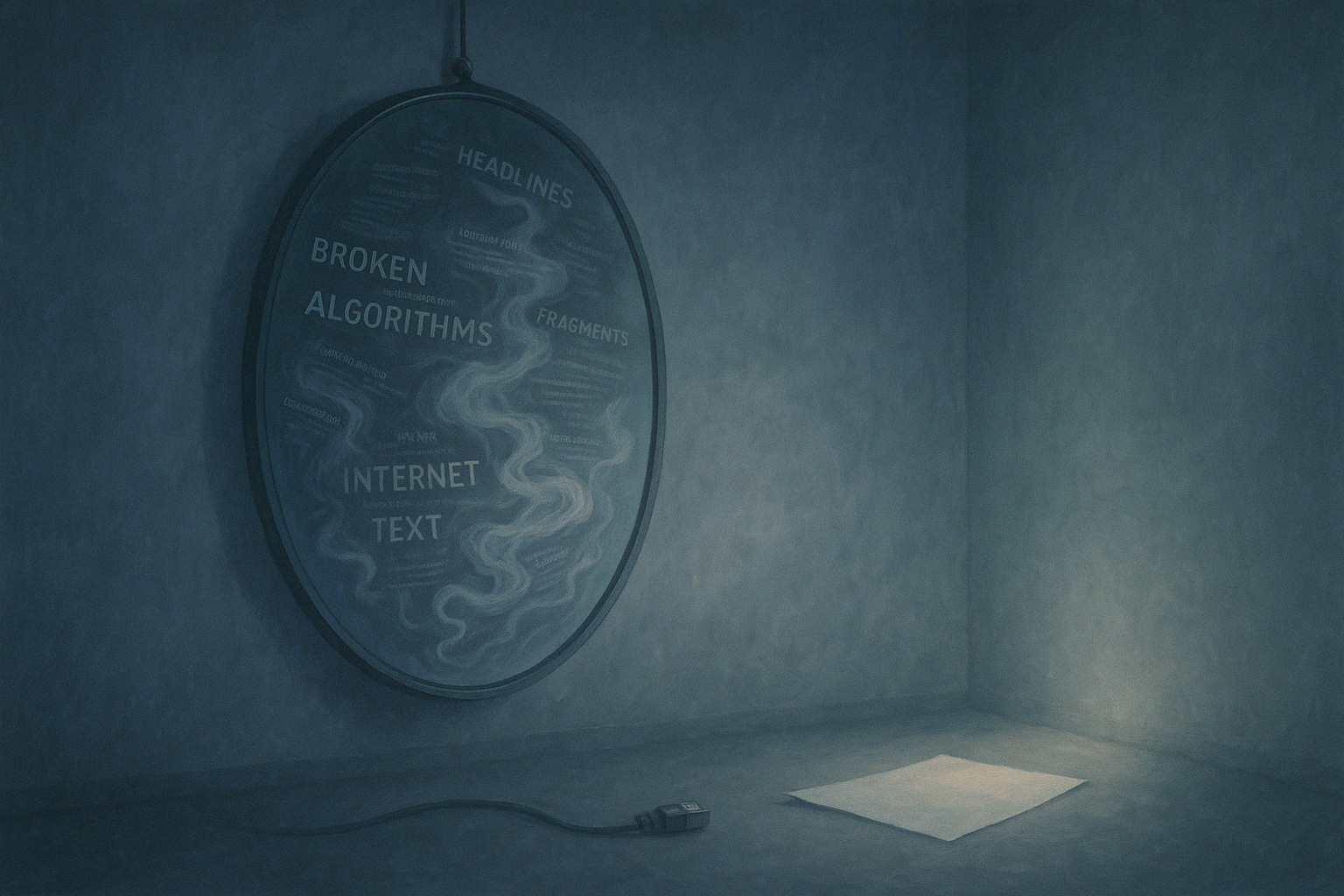

Why Large Language Models Can’t Be Trusted with the Truth

The more convincing the delivery, the easier it is to overlook the cracks in the foundation.

LLMs become most dangerous precisely when they sound most right. In information‑hungry environments, a confident error often outranks a cautious truth.

Unpacking Illusions in Language: The Risks of Trusting LLMs at Face Value

In their pursuit of coherence and plausibility, LLMs frequently generate falsehoods with convincing articulation, making their greatest technical strength a potential liability when misapplied or uncritically trusted.

Are You Dating AI?

We’re launching a short, anonymous survey to explore the growing phenomenon of AI relationships, and we want your voice in the mix.

Reframing Our Relationship with Language Models

The core utility of an LLM lies in its ability to generate language quickly and in context. It excels at tasks like drafting outlines, rephrasing sentences, or proposing different ways to frame a question. But the output should always be seen as a first step—never the final word.

In the Shadow of the Machine: Large Language Models and the Future of Human Thought

Large Language Models (LLMs) now support a broad range of tasks, from automated writing and analysis to education and creative ideation. These tools, once experimental, are now embedded in workflows across sectors. Their influence is undeniable, but their role must be critically examined.

The Misinformation Mirror: How AI Amplifies What We Already Believe

We see a similar pattern outside of science: in education, hiring, healthcare, and media, algorithms are routinely trained to reward engagement, not accuracy. They learn which ideas get clicks, which phrases get shared, which language feels persuasive. Over time, those patterns become predictive rules.

That is how bias becomes baseline.
