Lesson Summary:

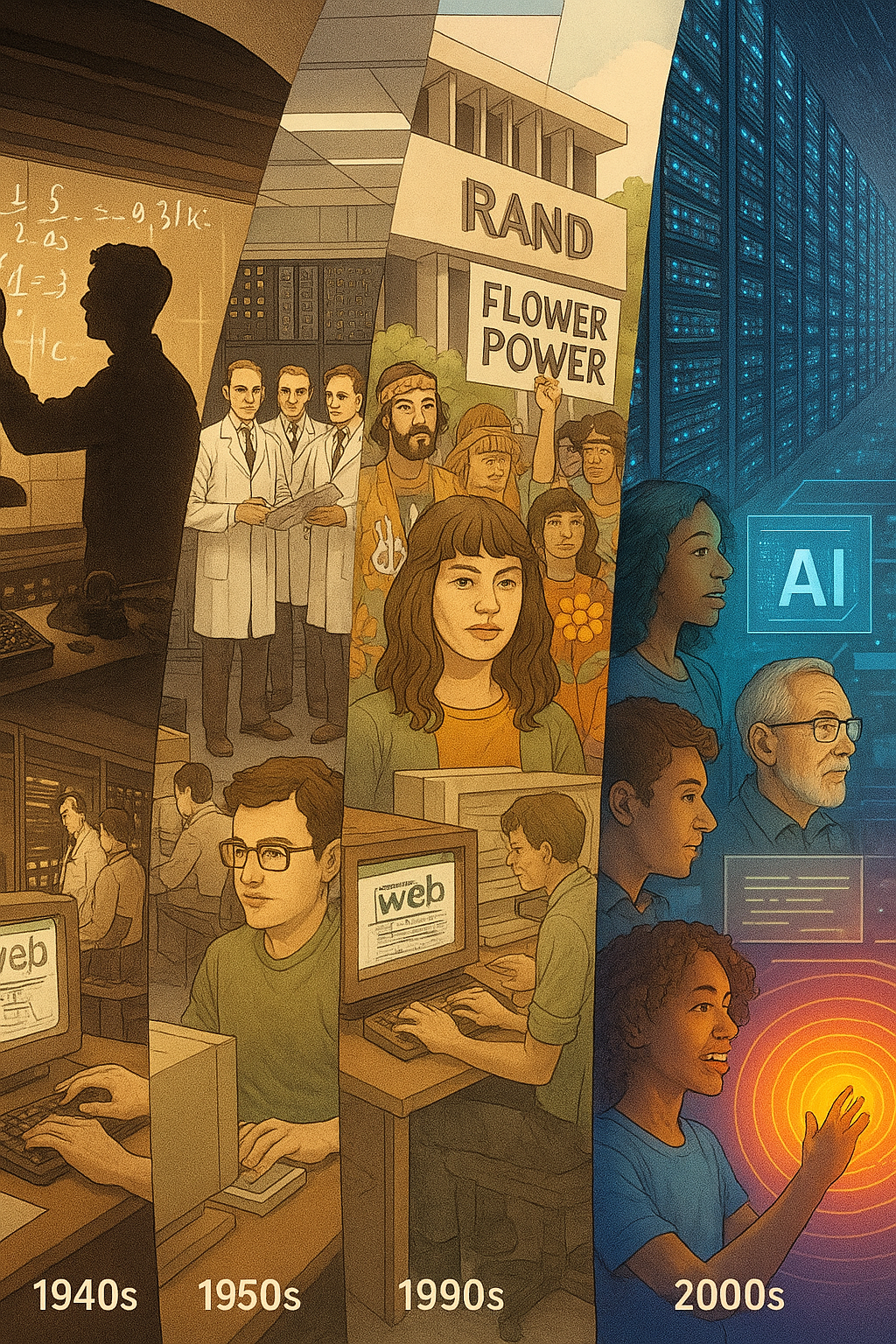

Journey through seven decades of artificial intelligence history, from Alan Turing's revolutionary question "Can machines think?" to today's ChatGPT revolution. You'll discover how war, economics, and human ambition have driven AI through dramatic boom-and-bust cycles, and why understanding this history helps us navigate AI's future. Meet the visionaries, survive the "AI winters," and see how each era's social needs shaped the technology we use today.

“We can only see a short distance ahead, but we can see plenty there that needs to be done.” (Alan Turing, "Computing Machinery and Intelligence," 1950)

Picture this: World War II rages across Europe. In a country mansion called Bletchley Park, a mathematician named Alan Turing is trying to break the German Enigma cipher, widely believed to be unbreakable. The pressure is immense: every day of delay costs lives.

Turing's radical solution? Build a machine that could test possible cipher settings faster than any human team. His electromechanical "Bombe" didn't just help win the war. It planted a revolutionary seed: What if machines could actually think?

The Question That Started Everything

In 1950, Turing published a paper asking, "Can machines think?" But he did something clever. Instead of defining "thinking" (philosophers had argued about that for centuries), he proposed a practical test: If a machine could convince a human it was human through conversation, wasn't it intelligent?

This "Imitation Game" (now called the Turing Test) transformed a philosophical question into an engineering challenge. The race was on.

Why History Matters for Your AI Journey

- Pattern Recognition: AI has gone through multiple hype cycles. Recognizing them helps you separate real breakthroughs from marketing buzz

- Social Context: Every AI advance reflects the fears and dreams of its time

- Future Navigation: Those who don't learn from AI history are doomed to repeat its failures

Let's dive into the four great waves that brought us from Turing's dream to today's AI revolution.

1939: When the Unthinkable Became Thinkable

Wave 1: Optimism Unlimited

- 1950: Turing Test proposed

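- 1956: Dartmouth Conference establishes "Artificial Intelligence" as a field (the term first appeared in the 1955 proposal for the event)

- 1958: Perceptron, an early trainable neural network, introduced by Frank Rosenblatt

- 1966: ELIZA chatbot mimics a therapist convincingly enough that some users treat it as one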

- Key Driver: Cold War competition & government funding

- Crash: Overpromises led to funding cuts

Wave 2: Rules Rule Everything

- 1980: Expert systems boom in corporations

- 1982: Japan's Fifth Generation Computer project launches

- 1986: Backpropagation algorithm revives neural networks

- Key Driver: Corporate automation & Japanese competition

- Crash: Expert systems proved brittle and expensive

Wave 3: Let Data Decide

- 1997: Deep Blue defeats chess champion Kasparov

- 2000s: Machine learning powers search engines

- 2011: IBM Watson wins Jeopardy!

- Key Driver: Internet data explosion & cheaper computing

- Foundation: Set stage for deep learning

Wave 4: The Current Tsunami

- 2012: ImageNet breakthrough

- 2016: AlphaGo defeats world champion

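- 2020: GPT-3 generates fluent text across a wide range of tasks

- 2023: ChatGPT reaches an estimated 100M users about two months after launch, the fastest consumer adoption recorded up to that point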

- Key Driver: Big Data + GPU computing + algorithmic breakthroughs

- Status: Still rising...

The Four Waves of AI Development: A Journey Through Hype and Progress

Artificial intelligence has evolved not as a steady climb upward but as a series of booms and busts. This document explores four distinct waves, from the Golden Age of the 1950s through today's deep learning revolution, revealing patterns that help us understand where AI is headed and how to navigate its promises and limitations.

Notice the pattern: each wave starts with breakthrough optimism, crashes when promises exceed reality, but leaves behind crucial innovations that enable the next wave. We're currently in the highest wave yet—but history suggests caution alongside excitement.

Alan Turing

Why He Matters: Created the theoretical foundation for all computer science

Born to a middle-class British family, Turing showed early brilliance but struggled socially. By one widely cited estimate, the codebreaking effort he helped lead at Bletchley Park shortened WWII enough to save some 14 million lives. Despite his heroism, he was prosecuted in 1952 for being gay and died tragically at 41.

Key Contributions:

- Turing Machine (1936): Defined what a machine can, in principle, compute

- Bombe Machine (1940): Sped up the breaking of Enigma-encrypted messages

- ACE Computer (1945): Early stored-program computer design

- Turing Test (1950): Framed machine intelligence as a practical, testable challenge

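His Prediction: By around 2000, a machine would be mistaken for a human about 30% of the time after five minutes of questioning. (Some chatbots have since claimed to clear that bar, though the results remain disputed.)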

John McCarthy

Why He Matters: Coined "Artificial Intelligence" and created LISP programming language

A mathematical prodigy from a working-class Boston family, McCarthy believed AI could help humanity solve its greatest challenges. He co-organized the legendary 1956 Dartmouth Conference, where AI was born as a field.

Key Contributions:

- Named "Artificial Intelligence" (1955)

- Created LISP language (1958): Still used in AI today

- Proposed time-sharing (1959): Enabled interactive computing

- Founded Stanford AI Lab (1963): Trained generations of AI researchers

His Vision: AI would create a post-scarcity society where machines did all labor

Marvin Minsky

Why He Matters: Built first neural network learning machine & co-founded MIT AI Lab

A polymath who was also an accomplished pianist, Minsky believed understanding AI would help us understand human consciousness. Ironically, "Perceptrons," the 1969 book he co-wrote with Seymour Papert critiquing simple neural networks, helped trigger the first AI winter.

Key Contributions:

- SNARC (1951): First neural network learning machine

- Confocal microscope patent: Revolutionized biology research

- "Society of Mind" theory: Intelligence emerges from simple agents

- Advisor to Stanley Kubrick for "2001: A Space Odyssey"

Geoffrey Hinton

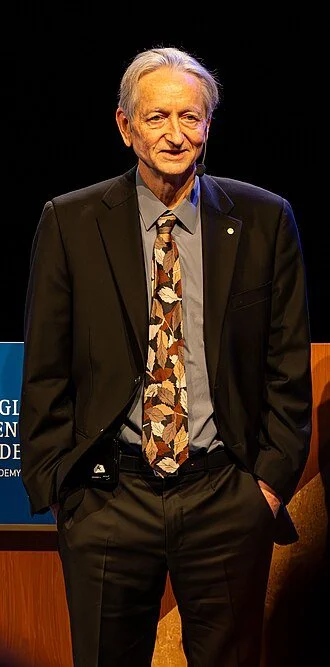

Why He Matters: Kept neural networks alive during AI winter & sparked deep learning revolution

A British-Canadian researcher, Hinton persisted with neural networks when most of the field had given up. His team's 2012 ImageNet victory launched the current AI boom. In 2023, he quit Google to warn about AI risks.

Key Contributions:

- Backpropagation (1986, with David Rumelhart and Ronald Williams): Showed how multi-layer networks could be trained effectively

- Deep Belief Networks (2006): Showed how to train many layers

- AlexNet (2012): ImageNet breakthrough that started deep learning era

- Capsule Networks (2017): Attempting to fix neural network limitations

His Warning: He once thought dangerous AI was 30 to 50 years away. He no longer believes we have that long.

Fei-Fei Li

Why She Matters: Created ImageNet dataset that enabled the deep learning revolution

Li immigrated from China at 16 and worked in dry cleaning to support her family while excelling at physics. She realized that AI needed massive, organized data to learn the way children do. Her ImageNet project was initially mocked as "stamp collecting."

Key Contributions:

- ImageNet dataset (2009): Grew to more than 14 million labeled images and transformed AI

- Co-founded AI4ALL nonprofit: Increasing diversity in AI education

- Co-founded Stanford HAI: Human-centered AI research

- Advocate for ethical AI: "AI is made by people, for people"

Her Insight: "We realized the problem wasn't the algorithms—it was the lack of data"