Unpacking Illusions in Language: The Risks of Trusting LLMs at Face Value

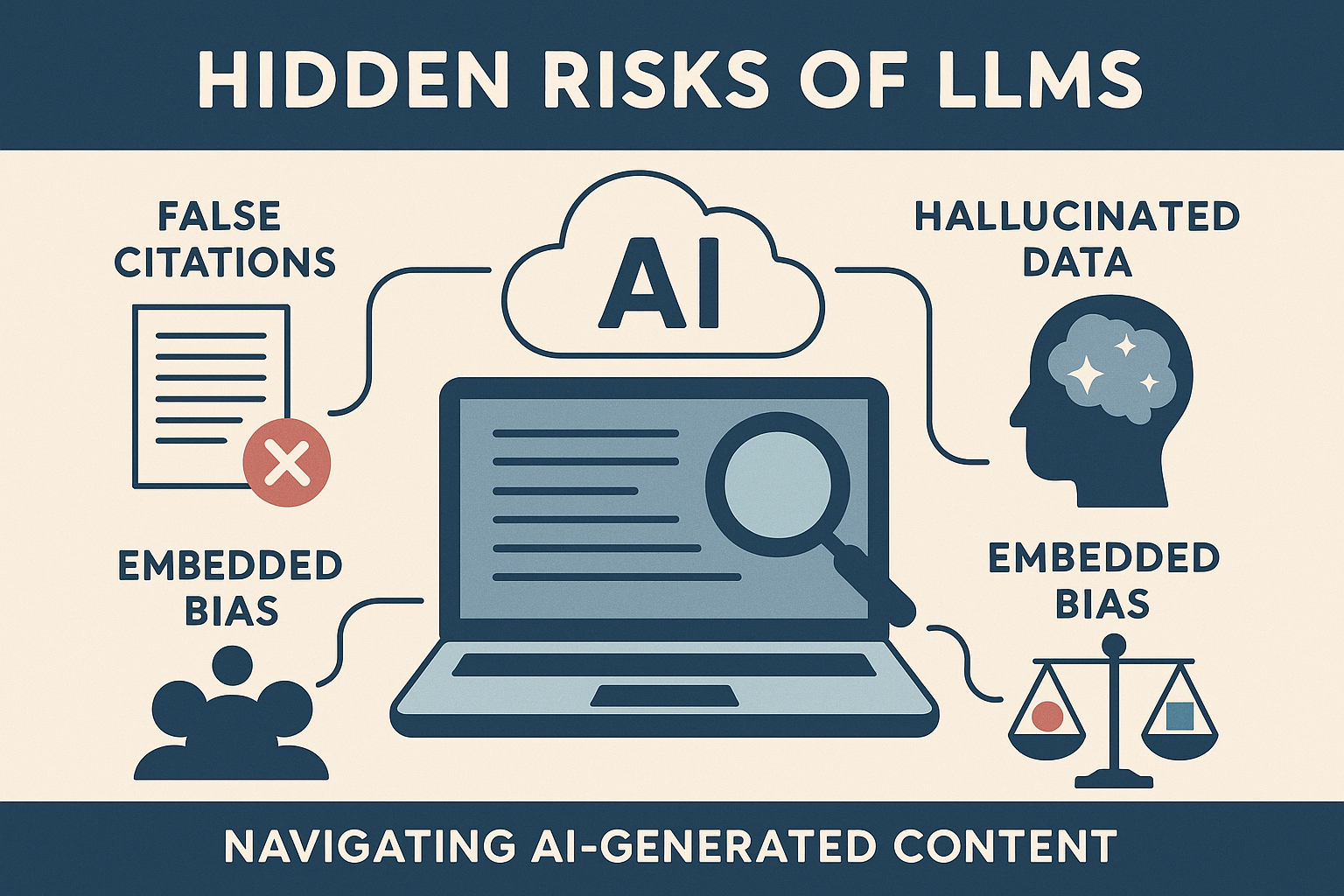

LLMs are reshaping the information economy—but their polished output often conceals deeper risks. From false citations to outdated data and algorithmic bias, our new post dives into the paradoxes that make LLMs both powerful and problematic.

Whether you’re building tech or relying on it, understanding these risks is essential to responsible AI use.

In the expanding ecosystem of artificial intelligence, Large Language Models (LLMs) have positioned themselves as seemingly indispensable tools for content creation, data summarization, and information retrieval. Their output, fluent and contextually relevant, often mirrors the tone and cadence of informed human discourse. Yet beneath the polished surface lies a sobering reality: LLMs are as fallible as the data that trains them, and their confident prose can obscure critical weaknesses in accuracy, transparency, and ethical alignment.

Because LLMs are built to produce coherent, plausible text, they can state falsehoods as fluently as facts. Their greatest technical strength therefore becomes a liability when they are misapplied or trusted uncritically.

Fabricated Sources and the Illusion of Authority

LLMs are designed to generate language that sounds natural and informed, but they do not possess a native understanding of factual correctness. One of the most problematic outcomes of this design is their tendency to fabricate citations and references. When prompted for sources, these models often produce plausible-looking but entirely fictional articles, journals, or studies.

This is not a result of malice or deceit but of how the underlying models are trained—predicting the most statistically likely next word or phrase based on vast quantities of existing text. Unfortunately, this method lacks the verification processes that underpin responsible research or reporting. For users who are unaware of this limitation, the risk is high: inaccurate data presented with a veneer of academic credibility can spread quickly and erode trust in legitimate sources.
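To make the "most likely next word" idea concrete, here is a deliberately tiny sketch. It assumes a bigram model (counting which word follows which) as a stand-in for a real LLM, which is vastly larger and learns probabilities with a neural network rather than raw counts. The toy corpus and the functions `most_likely_next` and `generate` are hypothetical, invented purely for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training text": three real-looking citations.
corpus = (
    "smith et al 2019 nature . "
    "jones et al 2021 science . "
    "lee et al 2021 science ."
).split()

# Bigram counts: for each word, how often each other word follows it.
following = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    following[prev][nxt] += 1


def most_likely_next(word):
    """Return the continuation seen most often after `word`, or None if unseen."""
    counts = following.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(start, max_words=10):
    """Greedily append the most likely next word until '.'; nothing checks truth."""
    words = [start]
    for _ in range(max_words):
        nxt = most_likely_next(words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


print(generate("smith"))  # prints "smith et al 2021 science"
```

Every word-to-word step in that output appears in the training text, yet the complete reference "smith et al 2021 science" does not: the model stitched statistically likely fragments into a citation that sounds plausible but was never in its data. Nothing in the loop asks whether the result is true, which is the gap the paragraph above describes.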

Stale Inputs and Hallucinated Histories

Another persistent issue with LLMs is their reliance on training data that becomes outdated. While these systems may reference seemingly recent topics, their "knowledge" is frozen at the time of their last data ingestion (their training cutoff). When asked about anything after that point, they may fill the gap with speculative or hallucinated information and present it as current fact. The concern is most acute in rapidly evolving fields such as medicine, law, and politics.

These hallucinations can distort timelines, misattribute events, or even invent entirely fictional developments. When language models operate without real-time fact-checking or external data validation, the risk isn't just misinformation; it's misplaced confidence. The output may appear authoritative, but without a citation or timestamp, there is no reliable way to assess its relevance or accuracy short of verifying it independently.

Embedded Bias and the Echo of Prejudice

LLMs inherit the biases of the internet, literature, and media corpora they are trained on. These inputs are not value-neutral. They reflect historical, cultural, and social prejudices, which can subtly (or overtly) shape the model’s outputs. While developers use moderation and filtering mechanisms to minimize harm, LLMs may still replicate stereotypes, marginalize voices, or fail to contextualize sensitive issues.

This is especially problematic when LLMs are used in customer service, education, or advisory roles, where a tone of neutrality and authority is expected. Even if unintentional, the echo of past inequities can perpetuate harm or exclusion. It is therefore essential that organizations applying LLMs critically assess the datasets, prompt engineering, and feedback loops that govern their use, and ensure transparency in when and how these models influence public-facing outputs.

Where they can go wrong:

- Fabricating fake sources or citations
- Giving outdated or hallucinated info
- Sounding confident while being completely off-base
- Mimicking biased, harmful, or culturally tone-deaf language
- Making “predictions” about things they can’t truly foresee (like market crashes or election results)

Warning Signs:

- Too polished, too fast, with no source trail
- Giving you what you want to hear instead of what’s accurate
- Failing to say “I don’t know”

Responsible Use in an Age of Fluent Machines

LLMs are tools. Powerful, complex, and constantly improving—but not infallible. Their ability to convincingly simulate human knowledge and expression should not be confused with actual understanding or insight. As we incorporate these technologies into knowledge production and decision-making processes, the obligation is twofold: to maintain rigorous verification standards, and to educate users on the capabilities and limitations of these systems.

In short, the promise of artificial intelligence must be tempered by critical evaluation. Fluency is not truth, coherence is not accuracy, and confidence is not competence. Only through informed oversight and transparent accountability can we ensure these models serve the public good rather than undermine it.